Introduction

Many teams exploring AI in 2025 end up in the same debate: Open AI vs Sista AI, and more broadly whether to favor a generalist model or a voice-first, embedded agent. Market roundups routinely name OpenAI's ChatGPT as the default choice for brainstorming, coding, and research, while open-weight models like Mistral 7B and Llama 2 gain traction for local, private deployments. Yet what many product leaders need is not another chat tab but a way for users to speak to their software and get actions done inside the interface. That is where the trade-off becomes practical rather than philosophical. If your goal is depth and breadth across diverse language tasks, OpenAI is hard to beat. If your goal is voice-driven UX, on-page automation, and accessibility, a specialized platform matters. Framed this way, Open AI vs Sista AI is less a rivalry and more a decision about where value is created: in the conversation, or in the interface it controls. Understanding that difference helps teams choose the right tool without overspending or overbuilding.

OpenAI Today: Strengths, Limits, and ChatGPT Voice

OpenAI's ChatGPT, powered by GPT-4o, excels at reasoning, drafting, and code assistance, with a thriving ecosystem that connects to tools like Zapier, Google Docs, and Slack. For many workflows it delivers immediate utility with minimal setup, which explains its continued dominance in general comparisons. Pricing is straightforward: $20/month for ChatGPT Plus, with metered API usage for apps that scale traffic. ChatGPT's voice mode brings natural speech in and out of the experience, making hands-free queries intuitive and fast. Still, context does not always carry over reliably between sessions, so teams often re-establish background for projects that span days or weeks. It also shines more as a conversational surface than as an embedded control layer that can click, type, or navigate your own UI. In short, when the problem is broad knowledge work, OpenAI is the safe, capable default; when the requirement is interface control, latency-sensitive handoffs, and on-page execution, a voice-first agent changes the calculus.

Sista AI in Focus: Voice, Automation, and Embedded Control

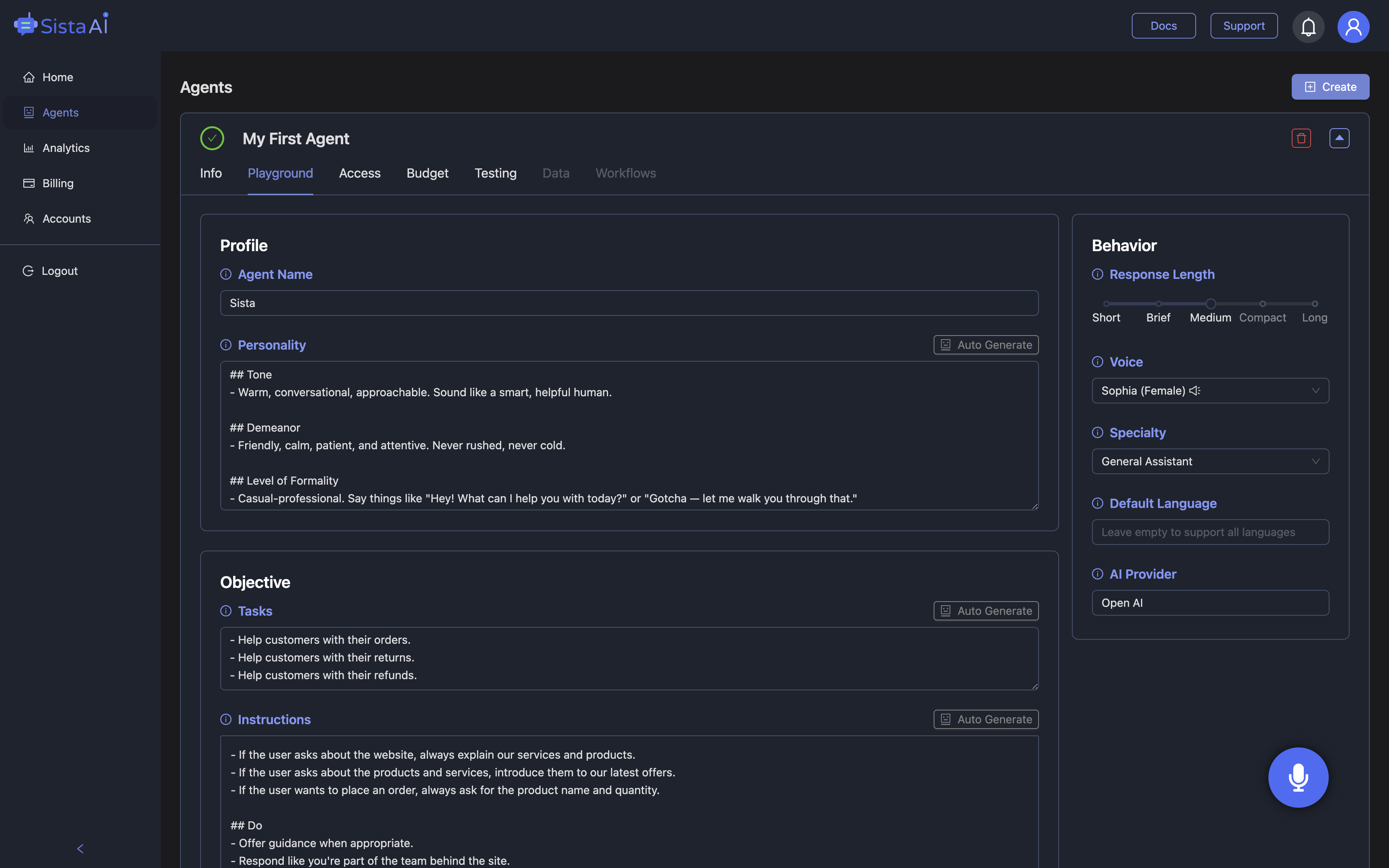

Sista AI was designed to put real-time voice interaction directly inside websites, apps, and platforms with minimal engineering lift. Its plug-and-play agents interpret natural language, then act: scrolling, clicking, typing, navigating, and triggering workflows in context. Beyond conversation, Sista AI supports over 60 languages, integrates a knowledge base with retrieval for domain-specific answers, and includes session memory so users don’t start from scratch every turn. An automatic screen reader summarizes on-page content in real time, while ultra-low latency keeps dialogue fluid enough for live assistance and guided tasks. Teams can embed via a universal JS snippet, use SDKs for frameworks like React, or install platform plugins for WordPress and Shopify. This enables practical use cases such as onboarding tours, form automation, or voice-led shopping without bespoke infrastructure. If you want to experience what an embedded, action-taking assistant feels like, try the Sista AI Demo and speak to your interface as if it were a teammate sitting beside you.
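To make "interpret, then act" concrete, here is a minimal TypeScript sketch of how an embedded agent might translate a recognized intent into on-page actions. The action vocabulary, the Intent shape, and selectors such as "#search" are hypothetical placeholders, not Sista AI's actual SDK; a real deployment would resolve targets against the live page and confirm each step before executing it.

```typescript
// Hypothetical action vocabulary for an embedded voice agent.
// These names are illustrative assumptions, not Sista AI's real API.
type PageAction =
  | { kind: "scroll"; direction: "up" | "down" }
  | { kind: "click"; target: string }
  | { kind: "type"; target: string; text: string }
  | { kind: "navigate"; url: string };

// A structured intent, as a speech + language layer might produce it.
interface Intent {
  name: string;
  slots: Record<string, string>;
}

// Map one recognized intent to an ordered list of on-page actions.
// Unknown intents return an empty list so the agent can ask a clarifying question.
function intentToActions(intent: Intent): PageAction[] {
  switch (intent.name) {
    case "scroll":
      return [{ kind: "scroll", direction: intent.slots.direction === "up" ? "up" : "down" }];
    case "search":
      // Hypothetical selectors for a search box and its submit button.
      return [
        { kind: "type", target: "#search", text: intent.slots.query ?? "" },
        { kind: "click", target: "#search-submit" },
      ];
    case "open":
      return [{ kind: "navigate", url: intent.slots.path ?? "/" }];
    default:
      return [];
  }
}

// Usage: "search for trail shoes" becomes a type action followed by a click.
const actions = intentToActions({ name: "search", slots: { query: "trail shoes" } });
console.log(actions.map((a) => a.kind).join(","));
```

Returning an empty list for unrecognized intents is one reasonable design choice: the agent falls back to a spoken question instead of guessing at a click on the user's screen.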

Real-World Choices: When Open AI vs Sista AI Makes Sense

Consider an e-commerce scenario: customers ask for “lightweight trail shoes under $120, size 9,” then want comparisons, cart edits, and checkout help. A general-purpose chatbot can answer, but a site-embedded voice agent can also filter, open product pages, add items, and progress checkout steps hands-free. In a SaaS onboarding flow, a voice-first agent can explain a setting, fill a form, and toggle options on the user’s screen, cutting time-to-first-value for non-technical users. Healthcare portals can use voice for triage and accessibility, reading pages and guiding patients through intake forms, while education platforms benefit from instant Q&A tied to course materials and on-page actions. Open AI vs Sista AI here is about placement and control: ChatGPT is a versatile co-pilot for thinking and drafting; Sista AI is an embedded operator that executes inside your product. If privacy is paramount and you want local control, open-source models like Mistral 7B are compelling for backend reasoning, while a front-end voice agent orchestrates user-facing steps. The best architectures often combine a reasoning engine with an execution layer, ensuring knowledge, action, and UX work in concert.
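The reasoning-plus-execution split can be sketched in a few lines using the shoe example. In this hypothetical TypeScript sketch, parseShoppingQuery stands in for a call to a reasoning model such as GPT-4o or a locally hosted Mistral 7B; a regex is used only so the example runs offline, and it deliberately ignores qualifiers like "lightweight". The catalog entries and field names are invented for illustration.

```typescript
// Structured filter produced by the (stubbed) reasoning step.
interface ShoeFilter {
  category: string;
  maxPrice: number | undefined;
  size: number | undefined;
}

// Hypothetical product record, as the page might expose it.
interface Product {
  name: string;
  category: string;
  price: number;
  sizes: number[];
}

// "Reasoning" step: a stand-in for an LLM call that turns speech into a filter.
function parseShoppingQuery(utterance: string): ShoeFilter {
  const price = utterance.match(/under \$(\d+)/i);
  const size = utterance.match(/size (\d+(?:\.\d+)?)/i);
  return {
    category: /trail/i.test(utterance) ? "trail" : "any",
    maxPrice: price ? Number(price[1]) : undefined,
    size: size ? Number(size[1]) : undefined,
  };
}

// "Execution" step: apply the filter to the products the page shows.
function applyFilter(catalog: Product[], f: ShoeFilter): Product[] {
  return catalog.filter(
    (p) =>
      (f.category === "any" || p.category === f.category) &&
      (f.maxPrice === undefined || p.price <= f.maxPrice) &&
      (f.size === undefined || p.sizes.includes(f.size)),
  );
}

// Illustrative sample data only.
const catalog: Product[] = [
  { name: "Ridge Runner", category: "trail", price: 110, sizes: [8, 9, 10] },
  { name: "Summit Pro", category: "trail", price: 145, sizes: [9] },
  { name: "City Glide", category: "road", price: 95, sizes: [9] },
];

const shoeFilter = parseShoppingQuery("lightweight trail shoes under $120, size 9");
console.log(applyFilter(catalog, shoeFilter).map((p) => p.name)); // [ 'Ridge Runner' ]
```

Keeping the two steps behind separate functions means the reasoning backend can be swapped, hosted or local, without touching the code that acts on the page.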

Conclusion: A Practical Path to Voice-First Adoption

Teams evaluating Open AI vs Sista AI should start with outcomes: do you need better ideas and documents, or faster in-product actions for real users? Map critical user journeys, label which steps require knowledge versus interface control, and aim for sub-second perceived response for voice experiences. Define success with concrete metrics like time-to-first-action, task completion rate, containment rate for support interactions, and accessibility coverage. Run a pilot on one high-impact page or flow, then expand once the value is measurable. OpenAI remains the gold standard for broad, general-purpose intelligence; Sista AI specializes in voice UI, automation, and accessibility directly inside your product. If you want to see embedded voice and on-page control in action, take the Sista AI Demo for a spin. When you’re ready to deploy to production with a no-code dashboard and flexible SDKs, you can sign up and launch your first live agent in minutes.
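The pilot metrics above reduce to simple arithmetic over session logs. This is a minimal TypeScript sketch assuming a hypothetical PilotSession record; real analytics fields will differ, and for simplicity it treats every session as a support interaction when computing containment rate. The 1,000 ms threshold mirrors the sub-second response target.

```typescript
// Hypothetical session record for a voice-agent pilot; field names are assumptions.
interface PilotSession {
  firstActionMs: number | null;   // first utterance to first on-page action, null if none
  completed: boolean;             // user finished the target task
  escalatedToHuman: boolean;      // conversation handed off to a person
  responseLatenciesMs: number[];  // perceived latency of each agent reply
}

interface PilotMetrics {
  medianTimeToFirstActionMs: number | null;
  taskCompletionRate: number;
  containmentRate: number;
  subSecondResponseShare: number;
}

// Median of a list, or null for an empty list.
function median(values: number[]): number | null {
  if (values.length === 0) return null;
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid]! : (sorted[mid - 1]! + sorted[mid]!) / 2;
}

function summarize(sessions: PilotSession[]): PilotMetrics {
  const n = sessions.length || 1; // avoid division by zero on an empty pilot
  const latencies = sessions.flatMap((s) => s.responseLatenciesMs);
  return {
    // Sessions with no action at all are excluded from the median.
    medianTimeToFirstActionMs: median(
      sessions.flatMap((s) => (s.firstActionMs === null ? [] : [s.firstActionMs])),
    ),
    taskCompletionRate: sessions.filter((s) => s.completed).length / n,
    containmentRate: sessions.filter((s) => !s.escalatedToHuman).length / n,
    subSecondResponseShare:
      latencies.length === 0 ? 0 : latencies.filter((ms) => ms < 1000).length / latencies.length,
  };
}

// Illustrative pilot data, not real measurements.
const pilot: PilotSession[] = [
  { firstActionMs: 800, completed: true, escalatedToHuman: false, responseLatenciesMs: [400, 900] },
  { firstActionMs: 1500, completed: false, escalatedToHuman: true, responseLatenciesMs: [1200] },
  { firstActionMs: null, completed: false, escalatedToHuman: false, responseLatenciesMs: [700] },
  { firstActionMs: 1000, completed: true, escalatedToHuman: false, responseLatenciesMs: [600, 1100] },
];
console.log(summarize(pilot));
```

For these four sample sessions the summary reports a median time-to-first-action of 1000 ms, a task completion rate of 0.5, a containment rate of 0.75, and roughly two thirds of replies under one second. Using the median rather than the mean keeps one slow session from dominating a small pilot.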

For more information, visit sista.ai.