Why Nano Banana Is Suddenly Everywhere

Photo editing is moving from filters to full-on creative direction, and Nano Banana is a big reason why. Google has quietly brought the model to Search through Google Lens and AI Mode, letting people transform existing photos or generate new images from a text prompt. Snap something you see, pick a shot from your gallery, or open the "Create image" tool and describe what you want; the system does the rest. Formally named Gemini 2.5 Flash Image, Nano Banana can remix a single photo into many variations and blend multiple images into one coherent scene. It also maintains character consistency, so a mascot, model, or product looks the same across edits. That’s why stylized "3D figurine" photos are trending: one pose, many looks. The feature is rolling out in English in the U.S. and India first, with more regions to follow. For creators, marketers, and small teams, this means faster content cycles and far less manual retouching. Pairing those visuals with voice-first tools can make ideation and iteration feel hands-free and immediate.

What You Can Actually Do With Nano Banana

Nano Banana is built for practical, repeatable output: think one product photo turned into a dozen seasonal banners, each with consistent lighting and proportions. It can blend a lifestyle background with a studio shot, or swap accessories while preserving your subject’s face and pose. Its creative control is more precise than in earlier releases, so you can request targeted transformations like "make the sky overcast, keep the jacket color, add soft shadows." A toy brand might take one neutral figurine shot and render ten playful diorama scenes for social in under an hour. The cost is approachable for teams: under Gemini API pricing, each image counts as roughly 1,290 output tokens, or about $0.039 per image, so 100 images come to about $3.90. Access is flexible too: developers can use Google AI Studio, and enterprise teams can deploy via Vertex AI. In Search, casual users can explore edits right inside Google Lens and AI Mode. As you scale, the key is building prompt templates and style guides that your team can reuse reliably. Voice-guided prompts can make this even faster, especially when your tools support natural commands and precise UI control.
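The per-image price follows directly from token-based billing. Below is a minimal Python sketch of that arithmetic. It assumes an output rate of $30 per million tokens, which was the launch-time list price for Gemini 2.5 Flash Image; check Google's current pricing before budgeting. It also ignores input tokens for prompts and source photos.

```python
# Assumption: $30 per 1M output tokens for Gemini 2.5 Flash Image (launch-time
# list price); check Google's current pricing before budgeting.
TOKENS_PER_IMAGE = 1_290
USD_PER_MILLION_OUTPUT_TOKENS = 30.00


def cost_per_image(tokens=TOKENS_PER_IMAGE, rate=USD_PER_MILLION_OUTPUT_TOKENS):
    """Output-token cost of one generated image, in US dollars."""
    return tokens * rate / 1_000_000


def batch_cost(num_images, tokens=TOKENS_PER_IMAGE, rate=USD_PER_MILLION_OUTPUT_TOKENS):
    """Output-token cost of a batch; input tokens (prompts, source photos) are ignored."""
    return num_images * cost_per_image(tokens, rate)


for n in (1, 12, 100, 200):
    print(f"{n:>4} images: ${batch_cost(n):.4f}")
```

Under these assumptions one image costs $0.0387, which rounds to the $0.039 quoted above. 100 images come to $3.87, slightly under the rounded $3.90 because the per-image price was rounded up before multiplying.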

For Builders: Speed, Cost, and Team Workflows

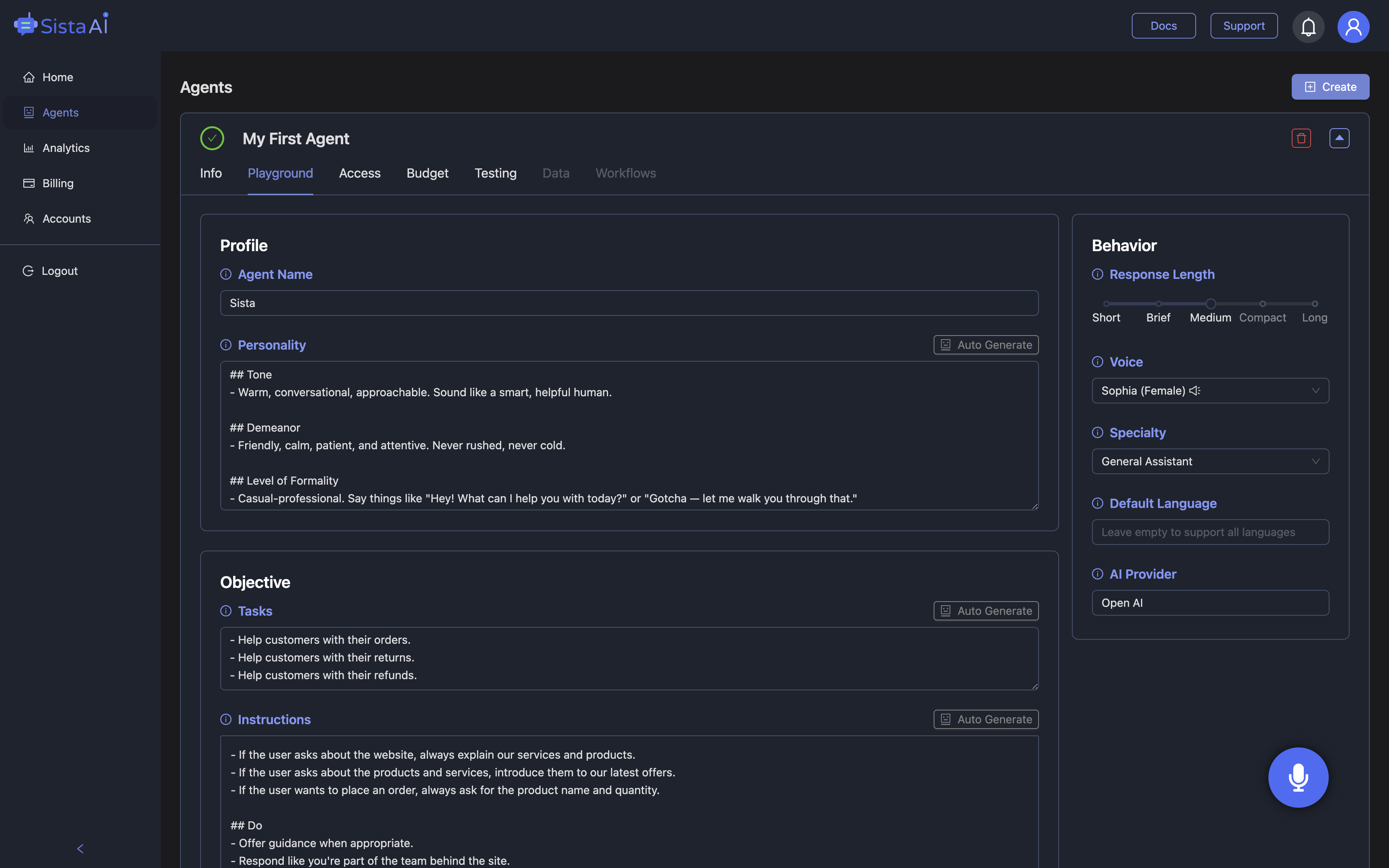

Under the hood, Gemini 2.5 Flash Image emphasizes low latency and higher-quality outputs, addressing a common request from earlier users. Developers can test and ship ideas in Google AI Studio’s build mode, then move to production via the Gemini API or Vertex AI. Two features stand out for teams. The first is multi-image blending that keeps scene logic intact. The second is character consistency across a series, which is useful for storyboards, comics, product shots, or training materials. Picture an indie game team producing 200 storyboard frames of the same hero in different settings; consistency matters as much as style. With clear prompts and a simple pipeline, producing all 200 frames in a single afternoon becomes plausible, and at about $0.039 per image the whole batch costs under $8 in output tokens. This is where voice and automation help: a voice agent can trigger batches, nudge parameters, or re-run a set with warmer lighting while you keep designing. With a voice-first layer like the one shown in the Sista AI Demo, teams can control web tools hands-free, generate descriptions, or file outputs to repos, which reduces clicks and context switching. The result is fewer bottlenecks and a faster path from idea to approved asset.
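Character consistency is easiest to protect when the character description never changes between prompts. The sketch below is a hypothetical prompt template in plain Python. `HERO`, `STYLE`, `build_frames`, and `batches` are illustrative names, not part of any Google API. The actual generation call (Gemini API, Vertex AI, or a voice agent) is deliberately left out.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical fixed descriptions: reusing the exact same text in every prompt
# is what keeps the hero and the visual style stable across frames.
HERO = "the same red-haired explorer with a green scarf and brass goggles"
STYLE = "hand-painted storyboard style, soft rim lighting, 16:9 frame"


@dataclass(frozen=True)
class FrameSpec:
    index: int   # 1-based frame number, handy for file names and review
    prompt: str  # full text prompt for one storyboard frame


def build_frames(settings, actions, hero=HERO, style=STYLE):
    """Return one prompt per (setting, action) pair; only those two parts vary."""
    frames = []
    for i, (setting, action) in enumerate(product(settings, actions), start=1):
        prompt = (
            f"{hero}, {action}, in {setting}. "
            "Keep the character's face, outfit, and proportions identical. "
            f"{style}."
        )
        frames.append(FrameSpec(i, prompt))
    return frames


def batches(items, size):
    """Yield consecutive slices of at most `size` items, e.g. one job each."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


settings = ["a desert canyon", "a flooded library", "a night market", "a glacier cave"]
actions = ["running", "climbing a ladder", "reading a map", "ducking under debris", "waving"]
frames = build_frames(settings, actions)  # 4 settings x 5 actions = 20 prompts

for batch in batches(frames, 8):
    # Placeholder: submit `batch` to your image-generation call of choice here.
    print(f"batch of {len(batch)}: frames {batch[0].index}-{batch[-1].index}")
```

Scaling the lists to 20 settings and 10 actions yields the 200 frames from the example. Submitting them in fixed-size batches keeps retries and review rounds manageable.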

Browser-Based Creation: Opera Neon, Consistency, and Voice Control

Opera Neon’s integration of Nano Banana makes quick generation and editing accessible in the browser, while earlier options like Imagen 4.0 and Ultra remain available for flexibility. Designers benefit from scene and character consistency during iterative edits, which prevents the “drift” that breaks campaigns. Imagine a marketer on a live client call: they open Neon, adjust the background, keep the model’s look identical, and export variants before the meeting ends. Many people are already comfortable talking to an assistant through ChatGPT voice; the same pattern now applies to visual workflows. With Sista AI’s voice agents, you can say “change the background to pastel, crop to 4:5, add soft vignette” and have the agent carry out the UI steps, fill forms, or submit prompts. The agent can also summarize on-screen results, compare versions aloud, and trigger multi-step automations. That reduces strain for multitaskers and improves accessibility for users who prefer or need voice navigation. To add a voice layer to your creative stack and manage it from a no-code dashboard, you can sign up for Sista AI in a few minutes.
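To make the voice example concrete, here is a hypothetical sketch of what an agent might do with that utterance. It splits the utterance into ordered steps and turns "crop to 4:5" into a pixel box. It is not Sista AI's implementation. `split_command`, `parse_ratio`, and `center_crop` are illustrative names, and the sketch assumes steps are separated by commas or semicolons.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class CropBox:
    left: int
    top: int
    width: int
    height: int


def split_command(utterance):
    """Split a compound command on commas/semicolons into trimmed, ordered steps."""
    return [step.strip() for step in re.split(r"[,;]", utterance) if step.strip()]


def parse_ratio(step):
    """Return (w, h) for text like 'crop to 4:5', or None if no ratio is present."""
    match = re.search(r"(\d+)\s*:\s*(\d+)", step)
    return (int(match.group(1)), int(match.group(2))) if match else None


def center_crop(width, height, ratio_w, ratio_h):
    """Largest centered box with aspect ratio ratio_w:ratio_h inside width x height."""
    if width * ratio_h > height * ratio_w:
        # Source is wider than the target ratio: keep full height, trim the sides.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Source is taller (or already matches): keep full width, trim top/bottom.
        crop_w = width
        crop_h = width * ratio_h // ratio_w
    return CropBox((width - crop_w) // 2, (height - crop_h) // 2, crop_w, crop_h)


command = "change the background to pastel, crop to 4:5, add soft vignette"
for step in split_command(command):
    ratio = parse_ratio(step)
    if step.startswith("crop") and ratio:
        print(step, "->", center_crop(1920, 1080, *ratio))
    else:
        print(step, "-> hand off to the editing tool")
```

For a 1920 x 1080 source, a 4:5 crop keeps the full 1080-pixel height and a centered slice 864 pixels wide, starting at x = 528.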

Putting It All Together: Nano Banana + Voice-First Automation

Nano Banana democratizes high-quality image generation with better control, reliable consistency, and practical pricing. Teams that standardize prompts, styles, and approvals can scale content without sacrificing quality. Adding voice-driven orchestration closes the loop: Sista AI’s plug-and-play agents handle voice UI control, real-time summarization, workflow automation, and even multilingual collaboration across 60+ languages. E-commerce teams can generate seasonal hero images, have the agent write alt text, and publish to a CMS, all in one guided flow. Support teams can produce help visuals and narrate updates for accessibility. Product squads can experiment faster, then push assets to GitHub or a design library with fewer clicks. If you want to see how voice agents streamline creative work, try the live Sista AI Demo and experience hands-free control in action. Ready to add voice and automation around Nano Banana and your other web tools? You can create your Sista AI account and deploy an agent on your site or app today.

Stop Waiting. AI Is Already Here!

It’s never been easier to integrate AI into your product. Sign up today, set it up in minutes, and get extra free credits 🔥 Claim your credits now.

Don’t have a project yet? You can still try it directly in your browser and keep your free credits. Try the Chrome Extension.

For more information, visit sista.ai.