From hype to working reality

If you’ve wondered how an AI agent works beyond the buzzwords, start with a simple idea: an agent is software that observes, thinks, decides, and acts in a loop. In practice, that loop is powered by an LLM “brain” plus tools for perception and execution, especially when voice is involved. Modern agents follow four repeatable stages (perception, reasoning, decision-making, and action), which lets them handle tasks from support triage to guided shopping. Voice experiences add speech recognition, real-time dialogue, and natural turn-taking, which helps explain the rapid interest in ChatGPT’s voice mode and similar systems. The value is tangible: faster answers, 24/7 availability, and consistent quality. Businesses adopting voice-first agents report shorter wait times and higher task completion, especially for routine requests. For teams exploring production-grade voice, the same loop applies; only the inputs, tools, and constraints change. That’s where platforms like Sista AI make voice agents practical to deploy across websites and apps.

The loop: perceive, reason, decide, act

To understand how an AI agent works in detail, follow the loop step by step. Perception begins by converting real-world or digital signals into usable context: microphones capture audio, speech-to-text transcribes words, and a perception module structures them for the LLM. Reasoning uses that structured context to analyze goals, check constraints, and plan next steps; today’s LLMs can also call tools mid-reasoning to fetch data, run code, or query knowledge bases. Decision-making selects the next best action using heuristics or utility functions, balancing accuracy, speed, and safety. Action executes that choice through software interfaces, such as calling APIs, filling forms, or, in voice UIs, speaking back through text-to-speech. A feedback loop then evaluates the outcome, updates memory, and retries if needed, which improves reliability over time. Short-term session memory keeps a conversation coherent, while a knowledge base (often accessed via retrieval-augmented generation, or RAG) grounds responses in company facts. For voice to feel natural, response latency must stay well under a second; many teams target roughly 300–500 ms or less for turn-taking. Sista AI’s real-time streaming pipeline is designed for this loop, pairing low-latency voice with workflow actions.
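To make the loop concrete, here is a minimal Python sketch, assuming text input in place of live audio. It is not Sista AI’s pipeline: `perceive()` stands in for speech-to-text by normalizing a string, `reason()` is a hard-coded stand-in for an LLM that proposes candidate actions, and the weights in `utility()` are arbitrary assumptions chosen only to show how accuracy, speed, and safety can be traded off. The tool names (`lookup_order`, `answer_from_kb`, `handoff_to_human`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Candidate:
    name: str          # which tool to call
    accuracy: float    # estimated chance the action succeeds (0..1)
    latency_ms: float  # expected time cost
    risk: float        # 0 = safe, 1 = risky


@dataclass
class Agent:
    # Tool name -> callable(context) returning a reply, or None on failure.
    tools: dict[str, Callable[[str], Optional[str]]]
    memory: list[str] = field(default_factory=list)  # short-term session memory
    max_retries: int = 2

    def perceive(self, raw: str) -> str:
        # Stand-in for speech-to-text plus a perception module: normalize text.
        return raw.strip().lower()

    def reason(self, context: str) -> list[Candidate]:
        # Stand-in for an LLM: propose candidate actions for this context.
        if "order" in context:
            return [Candidate("lookup_order", 0.9, 200, 0.1),
                    Candidate("handoff_to_human", 0.99, 5000, 0.0)]
        return [Candidate("answer_from_kb", 0.7, 150, 0.2),
                Candidate("handoff_to_human", 0.99, 5000, 0.0)]

    @staticmethod
    def utility(c: Candidate) -> float:
        # Arbitrary weights: reward accuracy, penalize latency and risk.
        return c.accuracy - 0.0001 * c.latency_ms - 0.5 * c.risk

    def decide(self, candidates: list[Candidate], tried: set[str]) -> Optional[Candidate]:
        # Pick the highest-utility candidate that has not already failed.
        remaining = [c for c in candidates if c.name not in tried]
        return max(remaining, key=self.utility, default=None)

    def act(self, action: Candidate, context: str) -> Optional[str]:
        return self.tools[action.name](context)

    def step(self, raw: str) -> str:
        context = self.perceive(raw)
        self.memory.append(f"user: {context}")
        candidates = self.reason(context)
        tried: set[str] = set()
        for _ in range(self.max_retries + 1):
            action = self.decide(candidates, tried)
            if action is None:
                break
            result = self.act(action, context)
            tried.add(action.name)
            # Feedback: keep a successful result in memory, otherwise retry.
            if result is not None:
                self.memory.append(f"agent: {result}")
                return result
        return "Sorry, I could not complete that."


tools = {
    "lookup_order": lambda ctx: None,  # simulate a failing order API
    "answer_from_kb": lambda ctx: "Our store opens at 9am.",
    "handoff_to_human": lambda ctx: "Connecting you to a person.",
}
agent = Agent(tools)
print(agent.step("Where is my ORDER?"))      # Connecting you to a person.
print(agent.step("What time do you open?"))  # Our store opens at 9am.
```

Because `lookup_order` is wired to fail here, the feedback step marks it as tried and falls back to the next-best candidate. A production agent would also log the failure and enforce a latency budget, which this sketch omits.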

From goals to tasks: the planner inside

Another way to see how an AI agent works is to focus on goal-driven planning. First, a user sets an objective, like “reschedule my appointment” or “compare two plans.” The agent then breaks the goal into a task list (verify identity, check availability, confirm preferences, update records), prioritizing steps that unlock later ones. It gathers information from tools or other models, stores results in working memory or a knowledge base, and tracks progress. As each task finishes, the agent collects feedback, detects blockers, and revises the plan. Iteration continues until the goal is met or a human handoff is needed. Consider a refund: the agent verifies order details, checks policy eligibility, calculates the amount, requests approval if required, and issues a confirmation, all while narrating its steps in a natural voice. Data-handling rules and permissions limit what the agent can access, keeping sensitive workflows safe. This pragmatic planning model is what makes voice-first agents dependable in real business contexts.
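The planning loop can be sketched as a small dependency-aware task runner. This is a minimal Python illustration, assuming the refund steps described above: each hypothetical `Task` lists the tasks it depends on, the runner prefers the ready task that unlocks the most others, a step can revise the plan by adding follow-up tasks (here, an approval step for refunds over an arbitrary threshold of 100), and a repeatedly blocked task ends in a human handoff. Task names, the threshold, and the order total are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Task:
    name: str
    # Returns None if blocked, otherwise a (possibly empty) list of follow-up tasks.
    run: Callable[[dict], Optional[list[Task]]]
    deps: list[str] = field(default_factory=list)    # tasks that must finish first
    blocks: list[str] = field(default_factory=list)  # existing tasks that must wait for this one


def execute_plan(tasks: list[Task], memory: dict, max_attempts: int = 2) -> tuple[list[str], str]:
    plan = {t.name: t for t in tasks}
    done: list[str] = []
    failures: dict[str, int] = {}
    while len(done) < len(plan):
        ready = [t for t in plan.values()
                 if t.name not in done and all(d in done for d in t.deps)]
        if not ready:
            return done, "handoff: no runnable task"
        # Prefer the ready task that unlocks the most other tasks.
        task = max(ready, key=lambda t: sum(t.name in o.deps for o in plan.values()))
        follow_ups = task.run(memory)
        if follow_ups is None:  # blocked: retry, then hand off to a human
            failures[task.name] = failures.get(task.name, 0) + 1
            if failures[task.name] >= max_attempts:
                return done, f"handoff: blocked at {task.name}"
            continue
        done.append(task.name)
        for new in follow_ups:  # revise the plan with newly discovered steps
            plan[new.name] = new
            for name in new.blocks:
                plan[name].deps.append(new.name)
    return done, "goal met"


def build_refund_plan() -> list[Task]:
    def verify_order(m: dict):
        m["amount"] = 120.0  # hypothetical order total
        return []

    def check_policy(m: dict):
        return [] if m.get("within_window", True) else None

    def calculate_amount(m: dict):
        m["refund"] = m["amount"]
        if m["refund"] > 100:  # hypothetical rule: large refunds need approval
            return [Task("request_approval", lambda _: [],
                         deps=["calculate_amount"], blocks=["issue_confirmation"])]
        return []

    def issue_confirmation(m: dict):
        m["confirmed"] = True
        return []

    return [
        Task("verify_order", verify_order),
        Task("check_policy", check_policy, deps=["verify_order"]),
        Task("calculate_amount", calculate_amount, deps=["check_policy"]),
        Task("issue_confirmation", issue_confirmation, deps=["calculate_amount"]),
    ]


print(execute_plan(build_refund_plan(), {}))
print(execute_plan(build_refund_plan(), {"within_window": False}))
```

In the first run the approval step is inserted mid-plan and confirmation waits for it; in the second, the policy check stays blocked after `max_attempts` tries and the runner returns a handoff instead of guessing.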

Voice-first in practice

In e-commerce, a voice agent can filter products by intent, compare features aloud, and add items to the cart without clicks; this is where a voice UI controller shines. For support, it can authenticate a user, summarize the issue from the current page, pull answers from a knowledge base, and open a ticket if resolution fails. In healthcare and education, voice improves accessibility: booking appointments, reading content aloud, or navigating forms hands-free. While ChatGPT’s voice mode showcases the conversational layer, production agents also need tool access, guardrails, and on-screen control. Sista AI’s plug-and-play stack combines conversational understanding, workflow automation, on-screen actions like scroll or click, multilingual speech, and integrated RAG for accurate answers. It can even run code or summarize on-page content in real time, reducing friction for users on any site. To hear a live example and talk to an embedded agent, try the Sista AI Demo; it’s a quick way to experience the full loop in action.
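Tool access, guardrails, and on-screen control can be combined in a small dispatcher. The Python sketch below is hypothetical and does not reflect Sista AI’s SDK: it assumes an upstream model has already turned speech into an `(intent, args)` pair, then checks the intent against a per-site allowlist and asks for confirmation before actions with side effects. The action names and the `page` dictionary standing in for real page state are illustrative.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    handler: Callable[[dict], str]
    needs_confirmation: bool = False  # guardrail for actions with side effects


class VoiceUIController:
    """Hypothetical dispatcher from parsed voice intents to on-screen actions."""

    def __init__(self, allowed: set[str]):
        self.allowed = allowed  # permissions granted for this site
        self.actions: dict[str, Action] = {}

    def register(self, action: Action) -> None:
        self.actions[action.name] = action

    def dispatch(self, intent: str, args: dict, confirmed: bool = False) -> str:
        action = self.actions.get(intent)
        if action is None:
            return f"unsupported: {intent}"
        if intent not in self.allowed:
            return f"denied: {intent}"
        if action.needs_confirmation and not confirmed:
            return f"confirm: {intent}?"  # ask the user before acting
        return action.handler(args)


# A dict standing in for page state (scroll position, cart contents).
page = {"scroll_y": 0, "cart": []}


def scroll(args: dict) -> str:
    page["scroll_y"] = args["y"]
    return f"scrolled to {args['y']}"


def add_to_cart(args: dict) -> str:
    page["cart"].append(args["sku"])
    return f"added {args['sku']}"


def checkout(args: dict) -> str:
    return "order placed"


ui = VoiceUIController(allowed={"scroll", "add_to_cart"})
ui.register(Action("scroll", scroll))
ui.register(Action("add_to_cart", add_to_cart, needs_confirmation=True))
ui.register(Action("checkout", checkout))

print(ui.dispatch("scroll", {"y": 800}))                          # scrolled to 800
print(ui.dispatch("add_to_cart", {"sku": "A1"}))                  # confirm: add_to_cart?
print(ui.dispatch("add_to_cart", {"sku": "A1"}, confirmed=True))  # added A1
print(ui.dispatch("checkout", {}))                                # denied: checkout
```

Keeping the allowlist separate from the action registry means the same controller can be deployed to different sites with different permissions.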

Getting started and measuring impact

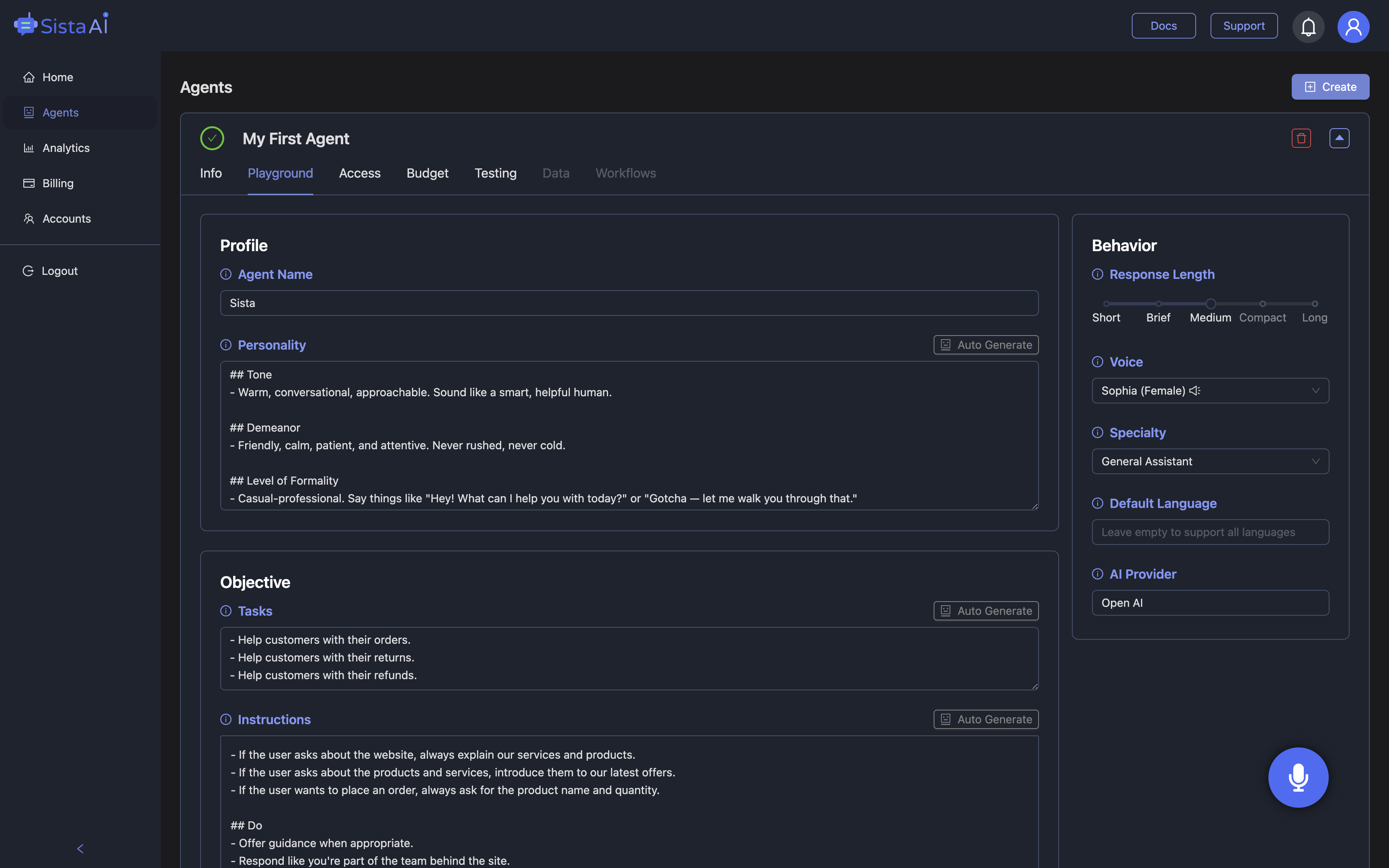

Success with voice agents starts with a clear scope: define the goals, map user journeys, and note the tools and permissions the agent can use. Identify the knowledge sources to ground answers and set guardrails for safety, compliance, and handoffs. Begin with one or two high-volume workflows—status checks, scheduling, or guided shopping—so you can measure impact on handle time, resolution rate, and CSAT. Pilot in a controlled segment, iterate using the agent’s feedback loop, and expand once metrics stabilize. Because implementation is often the hard part, Sista AI’s SDKs, universal JS snippet, and platform plugins (like Shopify and WordPress) help teams embed voice without heavy rewrites. When you’re ready to explore, take a few minutes to speak with the agent in the Sista AI Demo and see how the loop handles real tasks. And if your team wants to configure personas, permissions, and workflows for production, you can sign up and deploy a voice agent to your site in minutes.
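Once a pilot is running, the three metrics named above can be computed from per-interaction logs. This is a minimal Python sketch with assumed definitions: average handle time is the mean duration, resolution rate is the share of interactions finished without a human handoff, and CSAT is the share of survey responses scoring 4 or 5 on a 1–5 scale (one common convention). The `Interaction` record and the sample numbers are invented for illustration, not measured results.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Interaction:
    handle_seconds: float
    resolved: bool              # finished without a human handoff
    csat: Optional[int] = None  # 1-5 survey score; None if the user skipped it


def summarize(rows: list[Interaction]) -> dict[str, float]:
    scored = [r.csat for r in rows if r.csat is not None]
    return {
        "avg_handle_seconds": mean(r.handle_seconds for r in rows),
        "resolution_rate": sum(r.resolved for r in rows) / len(rows),
        # CSAT as the share of survey responses scoring 4 or 5.
        "csat": sum(s >= 4 for s in scored) / len(scored) if scored else float("nan"),
    }


def compare(baseline: list[Interaction], pilot: list[Interaction]) -> dict[str, float]:
    # Positive deltas mean the pilot scored higher than the baseline.
    b, p = summarize(baseline), summarize(pilot)
    return {k: p[k] - b[k] for k in b}


# Invented sample records, for illustration only.
baseline = [Interaction(300, True, 4), Interaction(420, False, 3)]
pilot = [Interaction(90, True, 5), Interaction(150, True, 4),
         Interaction(240, False, 2), Interaction(120, True, None)]

for metric, delta in compare(baseline, pilot).items():
    print(f"{metric}: {delta:+.2f}")
# avg_handle_seconds: -210.00
# resolution_rate: +0.25
# csat: +0.17
```

For handle time, lower is better, so a negative delta is the improvement. Drawing the baseline from the same traffic segment as the pilot keeps the comparison meaningful.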

Stop Waiting. AI Is Already Here!

It’s never been easier to integrate AI into your product. Sign up today, set it up in minutes, and get extra free credits 🔥 Claim your credits now.

Don’t have a project yet? You can still try it directly in your browser and keep your free credits. Try the Chrome Extension.

For more information, visit sista.ai.